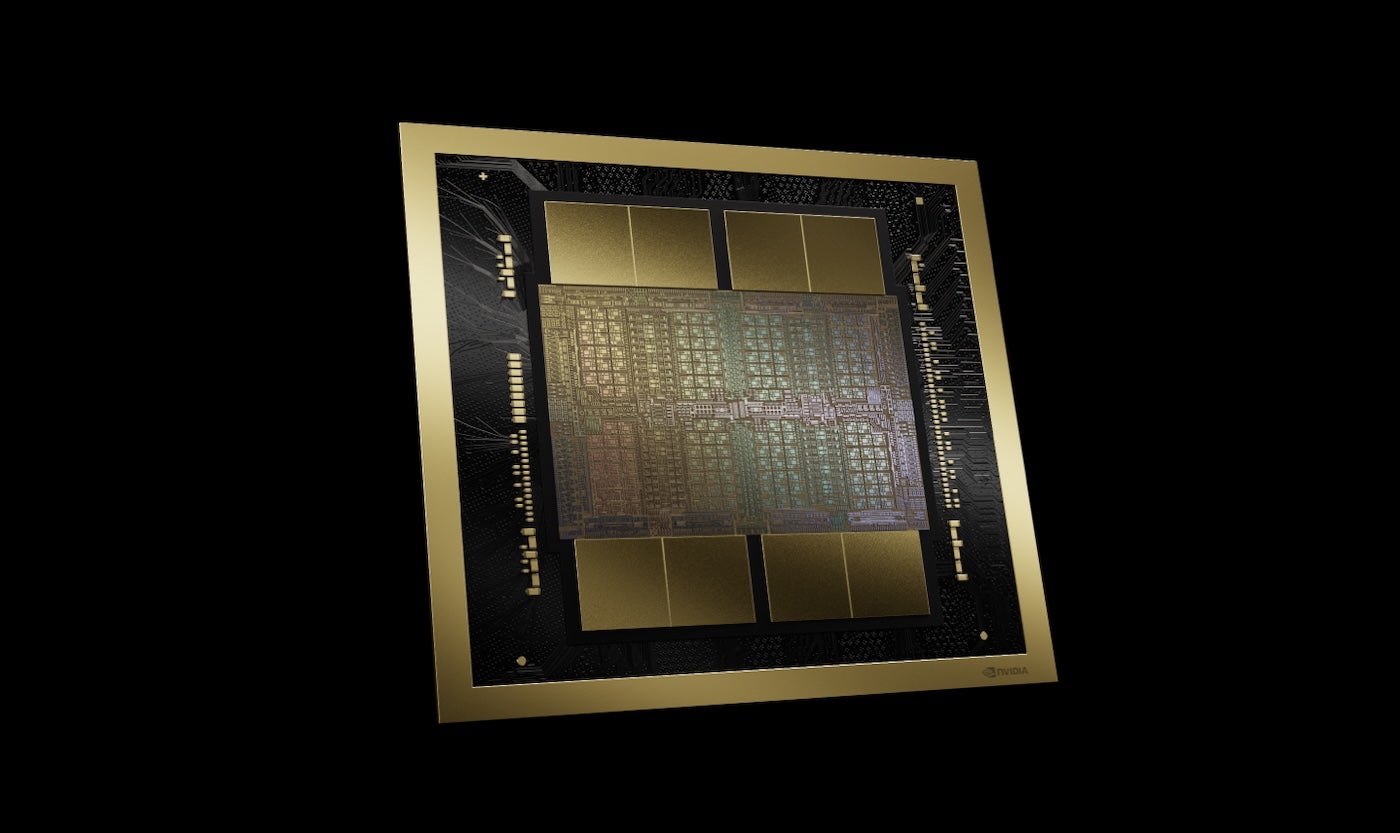

NVIDIA’s newest GPU platform is Blackwell (Figure A), which companies including AWS, Microsoft and Google plan to adopt for generative AI and other modern computing tasks, NVIDIA CEO Jensen Huang announced during the keynote at the NVIDIA GTC conference on March 18 in San Jose, California.

Figure A

The NVIDIA Blackwell architecture. Image: NVIDIA

Blackwell-based products will enter the market from NVIDIA partners worldwide in late 2024. Huang announced a long lineup of additional technologies and services from NVIDIA and its partners, describing generative AI as just one facet of accelerated computing.

“When you go accelerated, your infrastructure is CUDA GPUs,” Huang said, referring to CUDA, NVIDIA’s parallel computing platform and programming model. “And when that happens, it’s the same infrastructure as for generative AI.”

Blackwell enables large language model training and inference

The Blackwell GPU platform contains two dies connected by a 10 terabytes per second chip-to-chip interconnect, meaning each side can work essentially as if “the two dies think it’s one chip,” Huang said. It has 208 billion transistors and is manufactured using a custom-built TSMC 4NP process. It boasts 8 TB/s of memory bandwidth and 20 petaFLOPS of AI performance.

For enterprise, this means Blackwell can perform training and inference for AI models scaling up to 10 trillion parameters, NVIDIA said.
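To put the 10-trillion-parameter figure in perspective, here is a rough back-of-the-envelope sketch of how much memory the weights alone would need. The bytes-per-parameter values for each numeric format are assumptions chosen for illustration, and the estimate ignores activations, KV cache and optimizer state, so real requirements are higher. Even so, the result runs to terabytes, far beyond what a single GPU holds, which is why models of this size are spread across many GPUs.

```python
# Back-of-the-envelope sketch: memory needed just to store model weights.
# Assumption: the bytes-per-parameter values below for each numeric format;
# activations, KV cache and optimizer state are deliberately ignored.
BYTES_PER_PARAM = {
    "fp16": 2.0,
    "fp8": 1.0,
    "fp4": 0.5,
}


def weight_footprint_tb(num_params: float, fmt: str) -> float:
    """Return weight storage in terabytes (1 TB = 10**12 bytes)."""
    return num_params * BYTES_PER_PARAM[fmt] / 1e12


if __name__ == "__main__":
    params = 10e12  # the 10-trillion-parameter upper bound NVIDIA cites
    for fmt in ("fp16", "fp8", "fp4"):
        print(f"{fmt}: {weight_footprint_tb(params, fmt):.1f} TB")
```

Running it prints 20.0 TB for fp16, 10.0 TB for fp8 and 5.0 TB for fp4.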

Blackwell is enhanced by the following technologies:

- A second-generation Transformer Engine, integrated with NVIDIA’s TensorRT-LLM and NeMo Megatron frameworks.

- Support for double the compute and model sizes compared to the first-generation Transformer Engine.

- Confidential computing with native interface encryption protocols for privacy and security.

- A dedicated decompression engine for accelerating database queries in data analytics and data science.

Regarding reliability, Huang said the reliability engine “does a self-test, an in-system test, of every bit of memory on the Blackwell chip and all the memory attached to it. It’s as if we shipped the Blackwell chip with its own tester.”

Blackwell-based products will be available from partner cloud service providers, NVIDIA Cloud Partner program companies and select sovereign clouds.

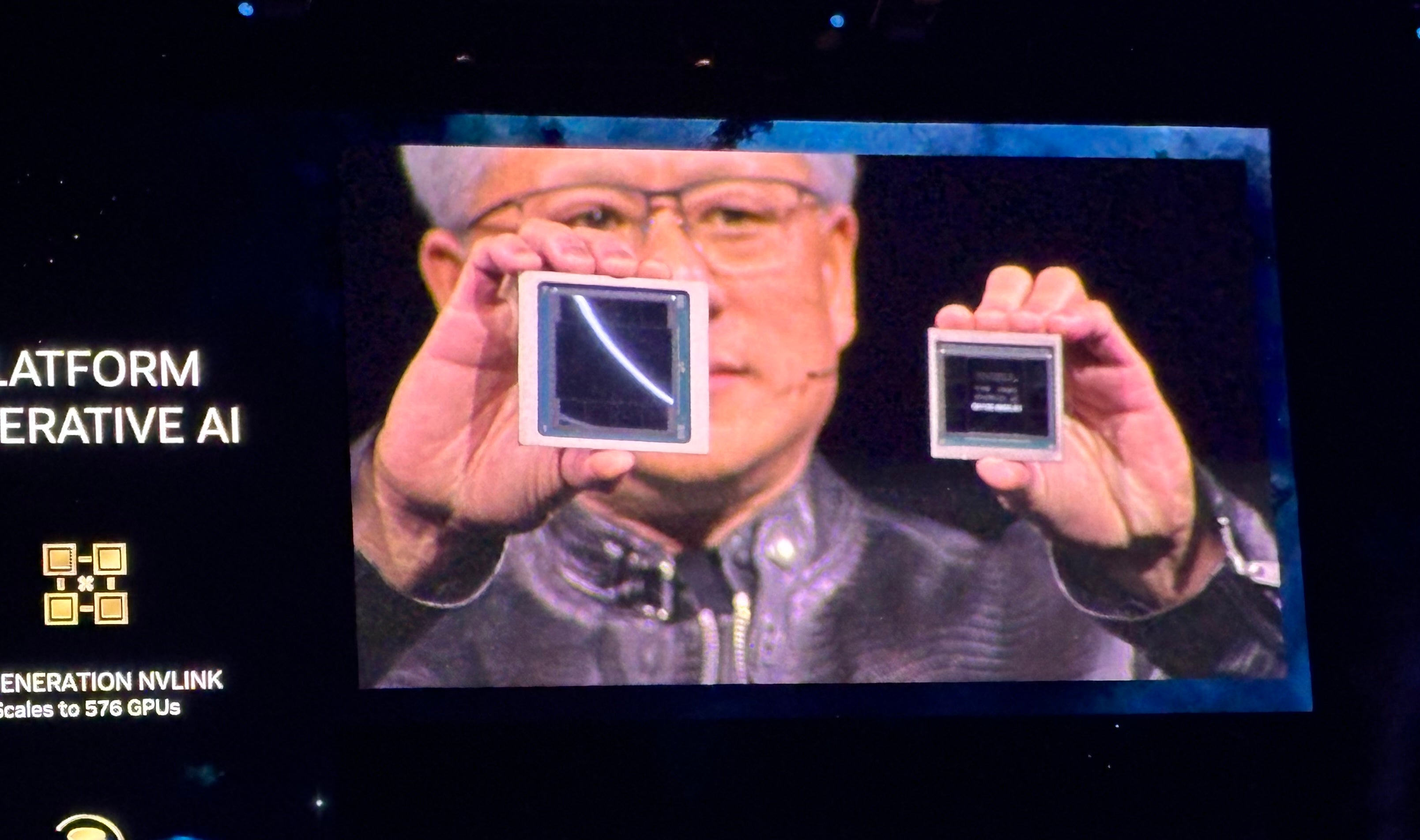

The Blackwell line of GPUs follows the Grace Hopper line of GPUs, which debuted in 2022 (Figure B). NVIDIA says Blackwell will run real-time generative AI on trillion-parameter LLMs at up to 25x lower cost and energy consumption than the Hopper line.

Figure B

NVIDIA CEO Jensen Huang shows the Blackwell (left) and Hopper (right) GPUs at NVIDIA GTC 2024 in San Jose, California on March 18. Image: Megan Crouse/TechRepublic

NVIDIA GB200 Grace Blackwell Superchip connects multiple Blackwell GPUs

Along with the Blackwell GPUs, the company announced the NVIDIA GB200 Grace Blackwell Superchip, which links two NVIDIA B200 Tensor Core GPUs to the NVIDIA Grace CPU, providing a new, combined platform for LLM inference. The NVIDIA GB200 Grace Blackwell Superchip can be linked with the company’s newly announced NVIDIA Quantum-X800 InfiniBand and Spectrum-X800 Ethernet platforms for speeds up to 800Gb/s.

The GB200 will be available on NVIDIA DGX Cloud and through AWS, Google Cloud and Oracle Cloud Infrastructure instances later this year.

New server design looks ahead to trillion-parameter AI models

The GB200 is one component of the newly announced GB200 NVL72, a rack-scale server design that packages together 36 Grace CPUs and 72 Blackwell GPUs for 1.8 exaFLOPs of AI performance. NVIDIA is looking ahead to potential use cases for massive, trillion-parameter LLMs, including persistent memory of conversations, complex scientific applications and multimodal models.

The GB200 NVL72 combines fifth-generation NVLink connectors (5,000 NVLink cables) and the GB200 Grace Blackwell Superchip for a massive amount of compute power that Huang calls “an exaflops AI system in one single rack.”

“That is more than the average bandwidth of the internet … we could basically send everything to everybody,” Huang said.

“Our goal is to continually drive down the cost and energy (they’re directly correlated with each other) of the computing,” Huang said.

Cooling the GB200 NVL72 requires 2 liters of water per second.

The next generation of NVLink brings accelerated data center architecture

The fifth generation of NVLink provides 1.8TB/s of bidirectional throughput per GPU for communication among up to 576 GPUs. This iteration of NVLink is intended for the most powerful and complex LLMs available today.
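A quick calculation shows how the per-GPU NVLink figure adds up at rack scale. This sketch assumes the NVL72 is built from 36 GB200 Superchips, each pairing one Grace CPU with two B200 GPUs, which matches the 36 CPUs and 72 GPUs NVIDIA quotes. It also naively assumes every GPU drives its full 1.8TB/s at once, so the totals are theoretical upper bounds rather than what applications would measure.

```python
# Sketch: naive aggregate NVLink bandwidth from NVIDIA's per-GPU figure.
# Assumption: every GPU drives its full 1.8 TB/s simultaneously; real traffic
# patterns and switch topology will reduce what applications actually see.
NVLINK5_TBPS_PER_GPU = 1.8  # bidirectional throughput per GPU, in TB/s

# Assumed GB200 NVL72 composition: 36 Superchips of 1 Grace CPU + 2 B200 GPUs.
SUPERCHIPS_PER_RACK = 36
CPUS_PER_SUPERCHIP = 1
GPUS_PER_SUPERCHIP = 2


def aggregate_nvlink_tbps(num_gpus: int, per_gpu_tbps: float = NVLINK5_TBPS_PER_GPU) -> float:
    """Sum per-GPU NVLink bandwidth (TB/s) across num_gpus GPUs."""
    return num_gpus * per_gpu_tbps


if __name__ == "__main__":
    rack_cpus = SUPERCHIPS_PER_RACK * CPUS_PER_SUPERCHIP
    rack_gpus = SUPERCHIPS_PER_RACK * GPUS_PER_SUPERCHIP
    print(rack_cpus, rack_gpus)                        # 36 72
    print(round(aggregate_nvlink_tbps(rack_gpus), 1))  # 129.6 (one NVL72 rack)
    print(round(aggregate_nvlink_tbps(576), 1))        # 1036.8 (576-GPU maximum)
```

Roughly 130TB/s inside a single rack is the kind of figure Huang was comparing to internet bandwidth.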

“In the future, data centers are going to be thought of as an AI factory,” Huang said.

Introducing the NVIDIA Inference Microservices

Another component of the potential “AI factory” is the NVIDIA Inference Microservice, or NIM, which Huang described as “a new way for you to receive and package software.”

NVIDIA’s NIMs are microservices containing the APIs, domain-specific code, optimized inference engines and enterprise runtime needed to run generative AI. These cloud-native microservices can be optimized for the number of GPUs the customer uses, and can be run in the cloud or in an owned data center. NIMs let developers use APIs, NVIDIA CUDA and Kubernetes in one package.
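Because a NIM packages its model behind an API, an application talks to it with ordinary HTTP requests. The sketch below only builds such a request with Python’s standard library and does not send it. It assumes a NIM running locally that exposes an OpenAI-style chat-completions route; the URL, port and model name are hypothetical placeholders, not values from the article, so check the documentation for the specific NIM you deploy.

```python
import json
import urllib.request

# Assumptions (not from the article): a locally running NIM exposing an
# OpenAI-style chat-completions route. URL and model name are placeholders.
NIM_URL = "http://localhost:8000/v1/chat/completions"  # hypothetical endpoint
MODEL = "example/llm-model"                             # hypothetical model id


def build_chat_request(prompt: str, url: str = NIM_URL, model: str = MODEL) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request for a chat completion."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 128,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request("Summarize the Blackwell announcement.")
    print(req.get_method(), req.full_url)
    # To call a running service (assumes an OpenAI-style response shape):
    # with urllib.request.urlopen(req, timeout=30) as resp:
    #     print(json.load(resp)["choices"][0]["message"]["content"])
```

Keeping request construction separate from sending makes it easy to point the same code at a NIM in the cloud or in an owned data center by changing only the URL.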


NIMs harness AI for building AI, streamlining some of the heavy-duty work, such as inference and training, required to build chatbots. Through domain-specific CUDA libraries, NIMs can be customized for highly specific industries such as healthcare.

Instead of writing code to program an AI, Huang said, developers can “assemble a team of AIs” that work on the process within the NIM.

“We want to build chatbots (AI copilots) that work alongside our designers,” Huang said.

NIMs are available starting March 18. Developers can experiment with NIMs at no charge and run them through an NVIDIA AI Enterprise 5.0 subscription. NIMs are available in Amazon SageMaker, Google Kubernetes Engine and Microsoft Azure AI, and can interoperate with the AI frameworks Deepset, LangChain and LlamaIndex.

New tools released for NVIDIA AI Enterprise in version 5.0

NVIDIA launched version 5.0 of AI Enterprise, its AI deployment platform intended to help organizations deploy generative AI products to their customers. NVIDIA AI Enterprise 5.0 adds the following:

- NIMs.

- CUDA-X microservices for a wide variety of GPU-accelerated AI use cases.

- AI Workbench, a developer toolkit.

- Support for Red Hat OpenStack Platform.

- Expanded support for new NVIDIA GPUs, networking hardware and virtualization software.

NVIDIA’s retrieval-augmented generation large language model operator is now in early access for AI Enterprise 5.0.

AI Enterprise 5.0 is available through Cisco, Dell Technologies, HP, HPE, Lenovo, Supermicro and other providers.

Other major announcements from NVIDIA at GTC 2024

Huang announced a wide range of new products and services across accelerated computing and generative AI during the NVIDIA GTC 2024 keynote.

NVIDIA announced cuPQC, a library used to accelerate post-quantum cryptography. Developers working on post-quantum cryptography can reach out to NVIDIA for updates about availability.

NVIDIA’s X800 series of network switches accelerates AI infrastructure. Specifically, the X800 series contains the NVIDIA Quantum-X800 InfiniBand and NVIDIA Spectrum-X800 Ethernet switches, the NVIDIA Quantum Q3400 switch and the NVIDIA ConnectX-8 SuperNIC. The X800 switches will be available in 2025.

Major partnerships detailed during NVIDIA’s keynote include:

- NVIDIA’s full-stack AI platform will be on Oracle’s Enterprise AI starting March 18.

- AWS will provide access to NVIDIA Grace Blackwell GPU-based Amazon EC2 instances and NVIDIA DGX Cloud with Blackwell security.

- NVIDIA will accelerate Google Cloud with the NVIDIA Grace Blackwell AI computing platform and the NVIDIA DGX Cloud service, coming to Google Cloud. Google has not yet confirmed an availability date, though it is likely to be late 2024. In addition, the NVIDIA H100-powered DGX Cloud platform is generally available on Google Cloud as of March 18.

- Oracle will use NVIDIA Grace Blackwell in its OCI Supercluster, OCI Compute and NVIDIA DGX Cloud on Oracle Cloud Infrastructure. Some combined Oracle-NVIDIA sovereign AI services are available as of March 18.

- Microsoft will adopt the NVIDIA Grace Blackwell Superchip to accelerate Azure. Availability can be expected later in 2024.

- Dell will use NVIDIA’s AI infrastructure and software suite to create Dell AI Factory, an end-to-end AI enterprise solution, available as of March 18 through traditional channels and Dell APEX. At an undisclosed time in the future, Dell will use the NVIDIA Grace Blackwell Superchip as the basis for a rack-scale, high-density, liquid-cooled architecture. The Superchip will be compatible with Dell’s PowerEdge servers.

- SAP will add NVIDIA retrieval-augmented generation capabilities to its Joule copilot. Plus, SAP will use NVIDIA NIMs and other associated services.

“The full manufacture is gearing up for Blackwell,” Huang said.

Competitors to NVIDIA’s AI chips

NVIDIA competes primarily with AMD and Intel in providing enterprise AI. Qualcomm, SambaNova, Groq and a wide variety of cloud service providers play in the same space for generative AI inference and training.

AWS has its own proprietary inference and training platforms: Inferentia and Trainium. As well as partnering with NVIDIA on a wide variety of products, Microsoft has its own AI training and inference chip: the Maia 100 AI Accelerator in Azure.

Disclaimer: NVIDIA paid for my airfare, accommodations and some meals for the NVIDIA GTC event held March 18-21 in San Jose, California.
